Some important LTE drive test indicator parameters:

1. RSRP : Reference Signal Received Power.

2. RSRQ : Reference Signal Received Quality.

3. RSSI : Received Signal Strength Indicator.

4. SINR : Signal to Interference plus Noise Ratio.

5. CQI : Channel Quality Indicator.

6. PCI : Physical Cell Identity.

7. BLER : Block Error Ratio.

8. DL Throughput : Downlink Throughput.

9. UL Throughput : Uplink Throughput.

These are the common key performance parameters we have to work out for an LTE drive test task.

1. RSRP:

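RSRP is the average power received from a single reference signal resource element. Its typical range is from around -44 dBm (good) to -140 dBm (bad).

Assuming full load with equal power on all resource elements and no noise or interference, RSRP is related to RSSI by:

RSRP (dBm) = RSSI (dBm) - 10*log(12*N)

where N is the number of resource blocks in the RSSI measurement bandwidth.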
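As a minimal sketch (the helper name is hypothetical), the conversion above can be written as follows. It only holds under the stated full-load, no-noise assumption, where RSSI = 12 × N × RSRP.

```python
import math

def rsrp_from_rssi_dbm(rssi_dbm: float, n_rb: int) -> float:
    """RSRP (dBm) = RSSI (dBm) - 10*log10(12*N).

    Assumes a fully loaded carrier where all 12*N subcarriers in the
    measured symbol carry equal power and there is no noise or interference.
    """
    if n_rb <= 0:
        raise ValueError("n_rb must be positive")
    return rssi_dbm - 10 * math.log10(12 * n_rb)

# Hypothetical reading: 10 MHz carrier (N = 50 RBs), RSSI = -60 dBm
print(round(rsrp_from_rssi_dbm(-60.0, 50), 2))  # -87.78
```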

2. RSRQ:

RSRQ indicates the quality of the received signal. Its range is typically -19.5 dB (bad) to -3 dB (good).

Reference Signal Received Quality (RSRQ) is defined as the ratio N × RSRP / (E-UTRA carrier RSSI), where N is the number of RBs of the E-UTRA carrier RSSI measurement bandwidth.

The measurements in the numerator and denominator shall be made over the same set of resource blocks.
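The definition translates directly into code. The sketch below, with hypothetical function names and example values, converts both absolute powers to milliwatts, forms N × RSRP / RSSI, and returns the result in dB. In log units this is simply RSRQ (dB) = 10*log(N) + RSRP (dBm) - RSSI (dBm).

```python
import math

def dbm_to_mw(p_dbm: float) -> float:
    """Convert an absolute power in dBm to milliwatts."""
    return 10 ** (p_dbm / 10)

def rsrq_db(rsrp_dbm: float, rssi_dbm: float, n_rb: int) -> float:
    """RSRQ = N * RSRP / RSSI, computed in linear units and returned in dB.

    Both RSRP and RSSI are absolute powers in dBm and are assumed to be
    measured over the same set of N resource blocks.
    """
    ratio = n_rb * dbm_to_mw(rsrp_dbm) / dbm_to_mw(rssi_dbm)
    return 10 * math.log10(ratio)

# Hypothetical readings on a 10 MHz carrier (N = 50 RBs)
print(round(rsrq_db(-87.78, -60.0, 50), 2))
```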

3. RSSI:

RSSI represents the entire received power, including the wanted power from the serving cell as well as all co-channel power and other sources of noise. It is related to the above parameters through the following formula:

RSRQ = N * (RSRP / RSSI)

where N is the number of Resource Blocks of the E-UTRA carrier RSSI measurement bandwidth.

RSSI (Received Signal Strength Indicator) is a parameter which provides information about the total received wideband power (measured in the OFDM symbols that contain reference signals), including all interference and thermal noise. RSSI is not reported to the eNodeB by the UE. It can simply be computed from RSRQ and RSRP, which are reported by the UE.

RSSI = wideband power = noise + serving cell power + interference power

So, without noise and interference and with 100% DL PRB activity, we have: RSSI = 12*N*RSRP

Where:

- RSRP is the received power of one RE (3GPP definition), averaged over the power levels received across all reference signal REs within the considered measurement frequency bandwidth

- RSSI is measured over the entire bandwidth

- N is the number of RBs over which RSSI is measured, and depends on the bandwidth
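Because the UE reports RSRP and RSRQ but not RSSI, RSSI can be backed out by rearranging RSRQ = N × RSRP / RSSI. A sketch with hypothetical helper names and example values, which also computes the ideal full-load value RSSI = 12 × N × RSRP for comparison:

```python
import math

def rssi_dbm_from_reports(rsrp_dbm: float, rsrq_db: float, n_rb: int) -> float:
    """Back out RSSI from UE-reported RSRP and RSRQ.

    RSRQ = N * RSRP / RSSI  =>  RSSI = N * RSRP / RSRQ, i.e. in log units
    RSSI(dBm) = 10*log10(N) + RSRP(dBm) - RSRQ(dB).
    """
    return 10 * math.log10(n_rb) + rsrp_dbm - rsrq_db

def rssi_dbm_full_load(rsrp_dbm: float, n_rb: int) -> float:
    """Ideal case (no noise/interference, 100% DL PRB activity): RSSI = 12*N*RSRP."""
    return rsrp_dbm + 10 * math.log10(12 * n_rb)

# Hypothetical reports on a 20 MHz carrier (N = 100 RBs)
print(round(rssi_dbm_from_reports(-95.0, -12.0, 100), 1))  # -63.0
print(round(rssi_dbm_full_load(-95.0, 100), 1))
```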

4. SINR:

SINR is the reference value used in system simulation and can be defined as:

- Wideband SINR

- SINR for specific subcarriers (or for specific resource elements)
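Whichever granularity is used, SINR is the ratio of the wanted signal power to the sum of interference and noise power, and the powers must be added in linear units rather than in dBm. A minimal sketch with hypothetical names and example values:

```python
import math
from typing import Sequence

def dbm_to_mw(p_dbm: float) -> float:
    """Convert an absolute power in dBm to milliwatts."""
    return 10 ** (p_dbm / 10)

def sinr_db(signal_dbm: float, interferers_dbm: Sequence[float], noise_dbm: float) -> float:
    """SINR = S / (sum of interference + noise), summed in mW and returned in dB."""
    denominator_mw = sum(dbm_to_mw(p) for p in interferers_dbm) + dbm_to_mw(noise_dbm)
    return 10 * math.log10(dbm_to_mw(signal_dbm) / denominator_mw)

# Hypothetical per-RE powers: serving cell -90 dBm, one interferer -100 dBm, noise -100 dBm
print(round(sinr_db(-90.0, [-100.0], -100.0), 2))
```

Applied per subcarrier or per RE it gives the narrowband SINR; applied to powers integrated over the whole carrier it gives the wideband value.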

RSRP vs RSRQ vs RSSI vs SINR

Below is a chart that shows what values are considered good and bad for the LTE signal strength values:

Reference Signals recap: OFDMA Channel Estimation

In simple terms, the Reference Signal (RS) is mapped to Resource Elements (REs). This mapping follows a specific pattern (see below).

- So at any point in time the UE will measure all the REs that carry the RS and average the measurements to obtain an RSRP reading.

- Channel estimation in LTE is based on reference signals (like CPICH functionality in WCDMA)

- Reference signal positions in the time domain are fixed (OFDM symbols 0 and 4 of each slot for a Type 1 frame with normal CP), whereas in the frequency domain they depend on the Cell ID

- In case more than one antenna is used (e.g. MIMO), the resource elements allocated to reference signals on one antenna are DTX on the other antennas

- Reference signals are modulated to identify the cell to which they belong
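To illustrate how the RS frequency position depends on the Cell ID, here is a sketch of the cell-specific RS mapping for antenna ports 0 and 1 as we read it from 3GPP TS 36.211, assuming normal CP (RS in OFDM symbols 0 and 4 of each slot) and a frequency shift of PCI mod 6. The function name is hypothetical and other antenna ports are not covered.

```python
from typing import List

def crs_subcarriers(pci: int, n_rb: int, port: int, symbol: int) -> List[int]:
    """Subcarrier indices of cell-specific RS in one OFDM symbol.

    k = 6*m + (v + v_shift) mod 6, m = 0 .. 2*n_rb - 1, v_shift = PCI mod 6.
    Assumes normal CP, so ports 0 and 1 carry RS in symbols 0 and 4 of a slot.
    """
    if port not in (0, 1):
        raise ValueError("this sketch only covers antenna ports 0 and 1")
    if symbol not in (0, 4):
        raise ValueError("ports 0/1 carry RS only in symbols 0 and 4 (normal CP)")
    v_shift = pci % 6
    if port == 0:
        v = 0 if symbol == 0 else 3
    else:
        v = 3 if symbol == 0 else 0
    return [6 * m + (v + v_shift) % 6 for m in range(2 * n_rb)]

print(crs_subcarriers(pci=0, n_rb=1, port=0, symbol=0))  # [0, 6]
print(crs_subcarriers(pci=0, n_rb=1, port=0, symbol=4))  # [3, 9]
print(crs_subcarriers(pci=1, n_rb=1, port=1, symbol=0))  # [4, 10]
```

Each port places one RS every sixth subcarrier; ports 0 and 1 together occupy every third subcarrier, and cells whose PCIs differ modulo 6 use shifted positions.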

Impact of serving cell power on RSRQ:

Example for noise limited case (no interference): If all resource elements are active and are transmitted with equal power, then

- RSRQ = N / 12N = -10.8 dB for 1Tx

- RSRQ = N / 20N = -13 dB for 2Tx taking DTX into account

(because RSRP is measured over 1 resource element and RSSI per resource block is measured over 12 resource elements).

Remember that RSSI is only measured during those symbol times in which RS REs are transmitted, and the DTX REs are not counted!

So, when there is no traffic, and assuming only the reference symbols are transmitted from a single Tx antenna (there are 2 of them within the same symbol of a resource block), the RSSI is generated by only those 2 reference symbols, so the result becomes:

- RSRQ = N / 2N = -3 dB for 1Tx

- RSRQ = N / 4N = -6 dB for 2Tx
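The four RSRQ values above follow from counting equal-power REs in one RS-bearing symbol of one RB (N = 1). A small sketch reproducing them; the RE counts per scenario are the assumptions spelled out in the comments, and the function name is hypothetical.

```python
import math

def rsrq_db_equal_power(powered_res_per_rb: int) -> float:
    """RSRQ for one RB (N = 1) when every transmitted RE has the same power.

    RSRP is the power of a single RS RE (1 unit); RSSI is the total power of
    the REs actually transmitted in an RS-bearing symbol of that RB, summed
    over all Tx antennas (DTX REs carry no power and are not counted).
    """
    return 10 * math.log10(1 / powered_res_per_rb)

scenarios = {
    "full load, 1Tx": 12,   # all 12 REs carry power
    "full load, 2Tx": 20,   # 10 powered REs per antenna (2 are DTX) x 2 antennas
    "no traffic, 1Tx": 2,   # only the 2 RS REs
    "no traffic, 2Tx": 4,   # 2 RS REs per antenna x 2 antennas
}
for name, res in scenarios.items():
    print(f"{name}: RSRQ = {rsrq_db_equal_power(res):.1f} dB")
```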

SNR vs. RSRP

RSRP is measured for a single subcarrier, so the relevant noise power is that of a 15 kHz bandwidth, -125.2 dBm, assuming:

- Noise figure = 7 dB

- Temperature = 290 K

Assumption: RSRP doesn’t contain noise power
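The -125.2 dBm figure is thermal noise kTB over 15 kHz plus the 7 dB noise figure. The sketch below reproduces it and, under the same assumption that RSRP contains no noise power, derives SNR as RSRP minus noise power. The helper names and the -100 dBm RSRP are hypothetical.

```python
import math

BOLTZMANN = 1.380649e-23  # Boltzmann constant, J/K

def noise_power_dbm(bandwidth_hz: float, noise_figure_db: float, temp_k: float = 290.0) -> float:
    """Thermal noise kTB (converted to dBm) plus the receiver noise figure."""
    ktb_watt = BOLTZMANN * temp_k * bandwidth_hz
    return 10 * math.log10(ktb_watt * 1000) + noise_figure_db

def snr_db_from_rsrp(rsrp_dbm: float, noise_dbm: float) -> float:
    """SNR per subcarrier, assuming RSRP contains no noise power."""
    return rsrp_dbm - noise_dbm

n_15k = noise_power_dbm(15e3, 7.0)
print(round(n_15k, 1))                             # -125.2
print(round(snr_db_from_rsrp(-100.0, n_15k), 1))   # 25.2
```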

Power Calculation Example

Let's try to calculate RSRP, RSSI and RSRQ for one very simple case of one resource block with 12 subcarriers and 0.5 ms in the time domain. Let's assume that the power of the reference symbols (shown by red squares) and the power of the other symbols carrying other data channels (shown by blue squares) is the same, i.e. 0.021 W.

Since RSRP is the linear average of the downlink reference signal power over the given channel bandwidth,

RSRP = 10*log(0.021*1000) = 13.2 dBm

RSSI, on the other hand, is the total received wideband power, so we have to add the power of all 12 subcarriers in the given resource block:

RSSI = 10*log(0.021*1000)+10*log(12) = 24 dBm

RSRQ is now simply the ratio of RSRP to RSSI, with N = 1:

RSRQ = 10*log(0.021/(12*0.021)) = -10.79 dB
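The same worked example in a few lines of Python (variable names are our own), which also shows the dB/dBm relationship: subtracting two dBm values yields a ratio in dB.

```python
import math

def watt_to_dbm(p_watt: float) -> float:
    """Absolute power in W -> dBm (power relative to 1 mW)."""
    return 10 * math.log10(p_watt * 1000)

re_power_w = 0.021   # same power on RS and data REs, as in the example
subcarriers = 12     # one resource block
n_rb = 1

rsrp_dbm = watt_to_dbm(re_power_w)                 # power of a single RE
rssi_dbm = watt_to_dbm(re_power_w * subcarriers)   # sum over all 12 subcarriers
rsrq_db = 10 * math.log10(n_rb * re_power_w / (re_power_w * subcarriers))

print(f"RSRP = {rsrp_dbm:.1f} dBm")   # 13.2 dBm
print(f"RSSI = {rssi_dbm:.1f} dBm")   # 24.0 dBm
print(f"RSRQ = {rsrq_db:.2f} dB")     # -10.79 dB
# dBm - dBm gives dB: with N = 1, RSRQ equals RSRP(dBm) - RSSI(dBm)
print(f"{rsrp_dbm - rssi_dbm:.2f} dB")
```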

Understanding dBm vs dB

dB is a ratio between two power values, while dBm expresses an absolute power value (referenced to 1 mW). So when we mention RSRP and RSSI we should always use dBm, since we are talking about absolute power values, but we need to use dB for RSRQ, since it is the ratio of RSRP to RSSI.

5. CQI:

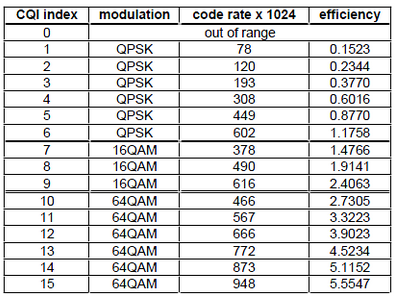

The Channel Quality Indicator (CQI) contains information sent from a UE to the eNodeB to indicate a suitable downlink transmission data rate, i.e., a Modulation and Coding Scheme (MCS) value. CQI is a 4-bit integer and is based on the observed signal-to-interference-plus-noise ratio (SINR) at the UE. The CQI estimation process takes into account UE capabilities such as the number of antennas and the type of receiver used for detection. This is important because, for the same SINR value, the MCS level that a UE can support depends on these capabilities, which need to be taken into account for the eNodeB to select an optimum MCS level for the transmission. The reported CQI values are used by the eNodeB for downlink scheduling and link adaptation, which are important features of LTE.

In LTE, there are 15 different CQI values ranging from 1 to 15, and the mapping between CQI, modulation scheme and code rate is defined as follows (3GPP TS 36.213):
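To make the CQI-to-modulation mapping concrete, here is a sketch encoding the 4-bit CQI table as we recall it from 3GPP TS 36.213 (Table 7.2.3-1): CQI index, modulation and code rate × 1024. Treat the values as an assumption to check against the specification release you work with; `cqi_efficiency` is a hypothetical helper.

```python
from typing import Dict, Tuple

# 4-bit CQI table (as recalled from 3GPP TS 36.213, Table 7.2.3-1; verify
# against your release). CQI index -> (modulation, code rate x 1024).
# CQI 0 means "out of range" and is not listed.
CQI_TABLE: Dict[int, Tuple[str, int]] = {
    1: ("QPSK", 78), 2: ("QPSK", 120), 3: ("QPSK", 193),
    4: ("QPSK", 308), 5: ("QPSK", 449), 6: ("QPSK", 602),
    7: ("16QAM", 378), 8: ("16QAM", 490), 9: ("16QAM", 616),
    10: ("64QAM", 466), 11: ("64QAM", 567), 12: ("64QAM", 666),
    13: ("64QAM", 772), 14: ("64QAM", 873), 15: ("64QAM", 948),
}
BITS_PER_SYMBOL = {"QPSK": 2, "16QAM": 4, "64QAM": 6}

def cqi_efficiency(cqi: int) -> float:
    """Spectral efficiency (bits per RE) = bits per symbol x code rate."""
    if cqi not in CQI_TABLE:
        raise ValueError("CQI must be 1..15 (0 = out of range)")
    modulation, rate_x1024 = CQI_TABLE[cqi]
    return BITS_PER_SYMBOL[modulation] * rate_x1024 / 1024

for cqi in (1, 7, 15):
    print(cqi, CQI_TABLE[cqi][0], round(cqi_efficiency(cqi), 4))
```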

6. PCI:

Cell ID sets the physical (PHY) layer Cell ID. This PHY-layer Cell ID determines the Cell ID Group and Cell ID Sector. There are 168 possible Cell ID groups and 3 possible Cell ID sectors; therefore, there are 3 * 168 = 504 possible PHY-layer cell IDs. When Cell ID is set to Auto, the demodulator will automatically detect the Cell ID. When Cell ID is set to Manual, the PHY-layer Cell ID must be specified for successful demodulation.

The physical layer cell id can be calculated from the following formula:

PHY-layer Cell ID = 3*(Cell ID Group) + Cell ID Sector
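A sketch of the formula and its inverse, using the ranges stated above (168 groups, 3 sectors, 504 PCIs). The function names are hypothetical.

```python
from typing import Tuple

def pci_from_group_sector(group: int, sector: int) -> int:
    """PHY-layer Cell ID = 3 * (Cell ID Group) + Cell ID Sector."""
    if not 0 <= group <= 167:
        raise ValueError("Cell ID Group must be 0..167")
    if not 0 <= sector <= 2:
        raise ValueError("Cell ID Sector must be 0..2")
    return 3 * group + sector

def group_sector_from_pci(pci: int) -> Tuple[int, int]:
    """Inverse mapping: (group, sector) = divmod(PCI, 3)."""
    if not 0 <= pci <= 503:
        raise ValueError("PCI must be 0..503")
    return divmod(pci, 3)

print(pci_from_group_sector(167, 2))   # 503
print(group_sector_from_pci(301))      # (100, 1)
```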

When Sync Type is set to C-RS, the Cell ID Auto selection will be disabled, and Cell ID must be specified manually. This is because the demodulator needs to know the values of the C-RS sequence to use for synchronization and because Cell ID determines these values. See RS-PRS for more information.

7. BLER:

3GPP TS 34.121, F.6.1.1 defines block error ratio (BLER) as follows: "A Block Error Ratio is defined as the ratio of the number of erroneous blocks received to the total number of blocks sent. An erroneous block is defined as a Transport Block, the cyclic redundancy check (CRC) of which is wrong."
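A minimal sketch of that definition, assuming a hypothetical log of per-transport-block CRC results in which every transmitted block appears exactly once:

```python
from typing import Iterable

def bler(crc_ok: Iterable[bool]) -> float:
    """BLER = erroneous transport blocks / total transport blocks.

    Each entry is the CRC result of one transport block
    (True = CRC passed, False = CRC failed).
    """
    results = list(crc_ok)
    if not results:
        raise ValueError("no transport blocks")
    errors = sum(1 for ok in results if not ok)
    return errors / len(results)

# Hypothetical log: 3 CRC failures out of 50 transport blocks
print(bler([True] * 47 + [False] * 3))  # 0.06
```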

8/9. DL/UL Throughput:

Assume a 2×5 MHz LTE system. We first calculate the number of resource elements (REs) in a subframe (a subframe is 1 ms):

12 Subcarriers x 7 OFDMA Symbols x 25 Resource Blocks x 2 slots = 4,200 REs

Then we calculate the data rate assuming 64 QAM with no coding (64QAM is the highest modulation for downlink LTE):

6 bits per 64QAM symbol x 4,200 REs / 1 ms = 25.2 Mbps

The MIMO data rate is then 2 x 25.2 = 50.4 Mbps. We now have to subtract the overhead related to control signaling such as PDCCH and PBCH channels, reference & synchronization signals, and coding. These are estimated as follows:

- PDCCH channel can take 1 to 3 symbols out of 14 in a subframe. Assuming that on average it is 2.5 symbols, the amount of overhead due to PDCCH becomes 2.5/14 = 17.86%.

- Downlink RS signal uses 4 symbols in every third subcarrier, i.e. 16 of the 168 REs in a resource block pair, resulting in 16/168 = 9.52% overhead for a 2×2 MIMO configuration

- The other channels (PSS, SSS, PBCH, PCFICH, PHICH) added together amount to ~2.6% of overhead

The total approximate overhead for the 5 MHz channel is 17.86% + 9.52% + 2.6% = 29.98%.

The peak data rate is then approximately 0.70 x 50.4 Mbps = 35.3 Mbps.
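The RE-counting method can be sketched as a small function (hypothetical name). The overhead fractions are passed in as parameters since they are estimates; here the RS share is counted as 16 of the 168 REs in a resource block pair for two antenna ports, and the PDCCH and other-channel figures are taken from the list above.

```python
from typing import Sequence

def peak_rate_mbps(n_rb: int, bits_per_symbol: int, layers: int,
                   overheads: Sequence[float]) -> float:
    """Uncoded peak rate using the RE-counting method (normal CP).

    REs per 1 ms subframe = 12 subcarriers x 7 symbols x n_rb x 2 slots.
    Overhead fractions are summed and removed from the raw rate.
    """
    res_per_subframe = 12 * 7 * n_rb * 2
    raw_bps = res_per_subframe * bits_per_symbol * layers * 1000  # 1000 subframes/s
    return raw_bps * (1 - sum(overheads)) / 1e6

# Assumed overhead estimates for a 5 MHz (25 RB) carrier with 2x2 MIMO
overheads = [
    2.5 / 14,   # PDCCH: 2.5 of 14 symbols on average
    16 / 168,   # cell RS for 2 ports: 16 of 168 REs per RB pair
    0.026,      # PSS, SSS, PBCH, PCFICH, PHICH (approximate)
]
print(round(peak_rate_mbps(25, 6, 2, []), 1))         # 50.4, before overhead
print(round(peak_rate_mbps(25, 6, 2, overheads), 1))  # 35.3
```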

Note that the uplink would have lower throughput because the modulation scheme for most device classes is 16QAM in SISO mode only.

There is another technique to calculate the peak capacity which I include here as well for a 2×20 MHz LTE system with 4×4 MIMO configuration and 64QAM code rate 1:

Downlink data rate:

- Pilot overhead (4 Tx antennas) = 14.29%

- Common channel overhead (adequate to serve 1 UE/subframe) = 10%

- CP overhead = 6.66%

- Guard band overhead = 10%

Downlink data rate = 4 x 6 bps/Hz x 20 MHz x (1-14.29%) x (1-10%) x (1-6.66%) x (1-10%) ≈ 311 Mbps.

Uplink data rate:

1 Tx antenna (no MIMO), 64 QAM code rate 1 (Note that typical UEs can support only 16QAM)

- Pilot overhead = 14.3%

- Random access overhead = 0.625%

- CP overhead = 6.66%

- Guard band overhead = 10%

Uplink data rate = 1 x 6 bps/Hz x 20 MHz x (1-14.29%) x (1-0.625%) x (1-6.66%) x (1-10%) ≈ 86 Mbps.
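Unlike the first method, this one multiplies per-factor efficiencies instead of summing overheads. The sketch below (hypothetical function name) simply evaluates the two products with the factor values from the bullet lists above, which is a quick way to check the arithmetic.

```python
from typing import Sequence

def capacity_mbps(layers: int, bits_per_hz: float, bandwidth_mhz: float,
                  overheads: Sequence[float]) -> float:
    """layers x bits/s/Hz x bandwidth, scaled by (1 - overhead) for each factor."""
    rate = layers * bits_per_hz * bandwidth_mhz
    for overhead in overheads:
        rate *= 1 - overhead
    return rate

# Downlink: 4x4 MIMO, 64QAM rate 1; pilot, common channel, CP, guard band
dl = capacity_mbps(4, 6, 20, [0.1429, 0.10, 0.0666, 0.10])
# Uplink: 1 Tx, 64QAM rate 1; pilot, random access, CP, guard band
ul = capacity_mbps(1, 6, 20, [0.1429, 0.00625, 0.0666, 0.10])
print(f"DL ~ {dl:.0f} Mbps, UL ~ {ul:.0f} Mbps")  # DL ~ 311 Mbps, UL ~ 86 Mbps
```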

As an alternative to these methods, one can refer to 3GPP TS 36.213, Tables 7.1.7.1-1, 7.1.7.2.1-1 and 7.1.7.2.2-1, for more accurate capacity calculations.

To conclude, the LTE capacity depends on the following:

- Channel bandwidth

- Network loading: number of subscribers in a cell which impacts the overhead

- The configuration & capability of the system: whether it’s 2×2 MIMO or SISO, and the MCS.